Garnet’s first blog post revisited the group's long-running effort to perform wavefunction-based correlation calculations in crystals. As he pointed out, many of our research projects try to push the boundaries of quantum chemistry and take years to complete (or not). In this post, I’ll write about our ongoing quest to understand high-temperature superconductivity, which has been an exciting journey since the beginning.

Garnet’s interest in strongly correlated materials goes back a long way, but he began working seriously in this field in 2010 with Dominika Zgid, then a postdoc in the group. They first explored dynamical mean-field theory (DMFT), which, among other things, is a framework into which quantum chemistry methods can easily be plugged. Their work helped popularize quantum chemistry impurity solvers, such as truncated CI solvers, in the DMFT community [1]. More importantly, the problems they encountered, such as the in-principle infinite bath and the complexity of working with Green’s functions, motivated the search for an alternative “DMFT” method based on wavefunctions.

This led to the invention of density matrix embedding theory (DMET), introduced in the paper by Gerald Knizia and Garnet [2]. The new approach kept the essential idea of embedding from DMFT but improved the overall numerical tractability by switching the primary variable of interest from the Green’s function to the ground-state wavefunction.

With this powerful new tool, it became possible to study the ground states of various interesting model systems. The group worked both on applications of the method, such as the honeycomb Hubbard model [3] and transition metal oxides, and on methodological extensions, such as the treatment of spectral properties [4], electron-phonon coupling [5], and molecular calculations [6].

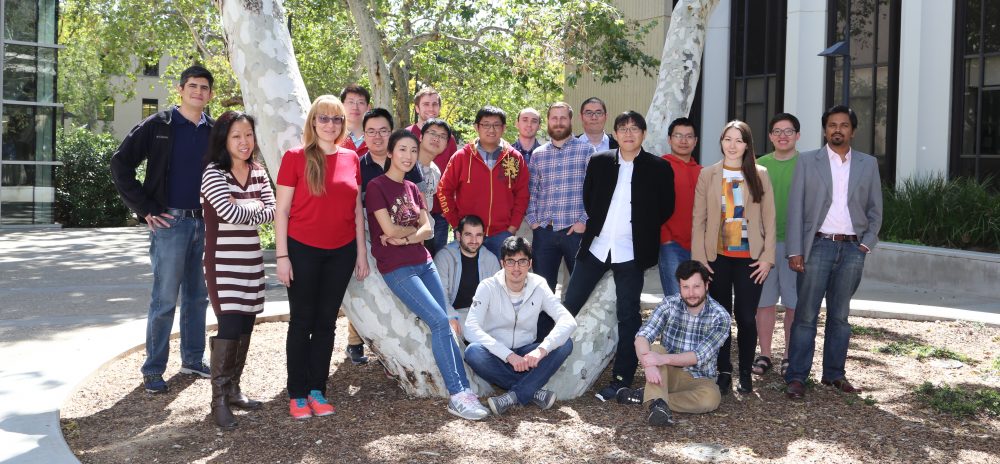

I joined the group during this period. As an initial project, I worked with Qiaoni Chen and Barbara Sandhoefer, both postdocs in our group, on transition metal oxides, hoping to locate the metal-insulator transition boundary. Unfortunately, various convergence problems made it difficult to characterize the boundary precisely, and the work was never finished. Still, it was a thorough exploration of the numerical aspects of DMET and proved very useful when we later tackled these problems.

Toward the end of that project, Garnet and I were also thinking about extending the range of systems that could be studied with DMET. After a long and painful search, we realized that DMET could easily incorporate wavefunctions with broken particle-number symmetry, which meant we could study superconductors directly. Although the physics was clear, it took quite a long time to work out the actual math. At first glance, the number of bath orbitals seemed to double with BCS wavefunctions. Only after a detailed analysis did I find that the Schmidt decomposition of a normal-state Slater determinant is equivalent to finding its single-particle and single-hole spectra relevant to the impurity. For BCS wavefunctions, these particle and hole excitations are simply mixed together. This leads to a way of constructing the impurity model from a BCS wavefunction that gives the correct number of bath orbitals.
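For the normal (non-BCS) case, the Schmidt decomposition of a Slater determinant can be carried out entirely in the one-particle basis. The sketch below is a minimal illustration under stated assumptions, not the group's code: it takes a matrix `C_occ` of orthonormal occupied orbitals on a lattice, treats the first `n_imp` sites as the impurity, and uses one standard route, a singular value decomposition of the impurity block. Occupied orbitals with singular values strictly between 0 and 1 are the ones entangled with the impurity; their normalized environment components are the bath orbitals, so there are never more bath orbitals than impurity sites. The BCS (Bogoliubov) generalization described above is not shown, and the helper name `slater_bath` is hypothetical.

```python
import numpy as np

def slater_bath(C_occ, n_imp, tol=1e-10):
    """Bath orbitals of a Slater determinant (hypothetical helper).

    C_occ : (n_sites, n_occ) array whose columns are orthonormal occupied orbitals.
    n_imp : the first n_imp sites are taken as the impurity.
    Returns an (n_sites - n_imp, n_bath) array of orthonormal bath orbitals
    expressed on the environment sites, with n_bath <= n_imp.
    """
    C_imp = C_occ[:n_imp, :]
    C_env = C_occ[n_imp:, :]
    # SVD of the impurity block: C_imp = U @ diag(s) @ Vh, with 0 <= s <= 1.
    _, s, Vh = np.linalg.svd(C_imp, full_matrices=False)
    # Rotated occupied orbitals C_occ @ Vh^H carry impurity weight s**2.
    # s == 1: the orbital lies entirely on the impurity (no bath partner);
    # s == 0: the orbital lies entirely in the environment (unentangled core).
    entangled = (s > tol) & (s < 1.0 - tol)
    env_part = C_env @ Vh[entangled].conj().T
    # Environment parts are mutually orthogonal with norms sqrt(1 - s**2).
    return env_part / np.linalg.norm(env_part, axis=0)

# Usage: spinless nearest-neighbour ring, 10 sites, 5 electrons, 2-site impurity.
n_sites, n_occ, n_imp = 10, 5, 2
h = np.zeros((n_sites, n_sites))
for i in range(n_sites):
    j = (i + 1) % n_sites
    h[i, j] = h[j, i] = -1.0
_, orbitals = np.linalg.eigh(h)          # eigenvalues in ascending order
bath = slater_bath(orbitals[:, :n_occ], n_imp)
print(bath.shape)
```

The 10-site ring at this filling is a closed shell, so the Slater determinant, and hence the bath, does not depend on how `eigh` orders degenerate orbitals. The impurity model is then built from the 2 impurity sites plus the returned bath orbitals.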

As I mentioned, studying high-temperature superconductivity is one of the goals Garnet was particularly interested in, and now we could actually model it!

The implementation was quite a bit of work. I wrote a new DMET code to fix the issues discovered in our transition metal project and to provide a more flexible interface, one that could treat normal and BCS wavefunctions, both spin-restricted and unrestricted, within the DMET calculation. One of the key theoretical improvements was to introduce a chemical potential to reduce the discrepancy between the numbers of electrons in the impurity model and in the mean-field calculation. In addition, I started using the Block DMRG code (extended to particle-number-nonconserving wavefunctions) as the impurity solver, which made it possible to treat larger impurities. Later on, AFQMC and CASSCF solvers were also added.
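The post does not spell out how the chemical potential is chosen. One simple way to picture it, assumed here for illustration, is a one-dimensional root search: shift the impurity orbital energies by -μ, solve the impurity model, and adjust μ until the impurity electron count matches the target filling. In the sketch below, a hypothetical toy function `fermi_occupation` (noninteracting levels with Fermi-Dirac occupations) stands in for a real impurity solver such as DMRG, and bisection is the assumed root finder; it relies only on the electron count increasing monotonically with μ.

```python
import math

def fermi_occupation(levels, mu, temperature=0.1):
    """Toy stand-in for an impurity solver (hypothetical): electron count of
    noninteracting levels at chemical potential mu with Fermi-Dirac occupations."""
    return sum(1.0 / (1.0 + math.exp((e - mu) / temperature)) for e in levels)

def fit_chemical_potential(n_of_mu, target, lo, hi, tol=1e-12, max_iter=200):
    """Bisection for mu such that n_of_mu(mu) == target.

    Assumes n_of_mu increases monotonically with mu and that
    n_of_mu(lo) <= target <= n_of_mu(hi).
    """
    if not n_of_mu(lo) <= target <= n_of_mu(hi):
        raise ValueError("target electron count is not bracketed by [lo, hi]")
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if n_of_mu(mid) < target:
            lo = mid   # too few electrons: the root lies at larger mu
        else:
            hi = mid   # enough or too many electrons: the root lies at smaller mu
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)

# Usage: four particle-hole-symmetric toy levels with a target of 2 electrons.
levels = [-1.5, -0.5, 0.5, 1.5]
mu = fit_chemical_potential(lambda m: fermi_occupation(levels, m),
                            target=2.0, lo=-5.0, hi=5.0)
print(f"mu = {mu:.6f}, n = {fermi_occupation(levels, mu):.6f}")
```

Bisection is chosen here only because it is robust for a monotone, possibly noisy count; with an expensive solver, a secant or Newton-type update that reuses previous solves would need fewer impurity calculations.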

Finally, in mid-2014, we obtained the first numbers for the 2D Hubbard model with a minimal (2×2) impurity. The results were both encouraging (we could see superconducting domes and antiferromagnetic order) and frustrating (the antiferromagnetic order was far too strong). While planning calculations with larger impurities, we also presented the results at conferences and workshops, hoping to get input from people more familiar with the condensed matter world.

We got the most help from the Simons Collaboration on the Many Electron Problem. In meetings with scientists there, we received positive feedback and many useful suggestions. We decided to run 4×4 impurity calculations on the Hubbard model, which was hard even with DMRG, since the impurity model has 32 orbitals without a definite particle number. We had to develop a series of extrapolation techniques, and when everything was finally combined into a single number, the energy, it “magically” agreed with the best available data! At the same time, the phase diagram looked much more reasonable, and we also found strong evidence for the long-suspected stripe phases. The results told us that DMET's convergence to the exact result with increasing impurity size is not merely a formal statement: even small-cluster calculations can give good estimates of physical quantities in the thermodynamic limit. Honestly, I was surprised by the results [7].
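The post does not list the extrapolation techniques. As one generic illustration, assumed here rather than taken from the work described, DMRG energies are commonly extrapolated linearly in the truncation error (discarded weight) to estimate the untruncated limit. The sketch fits a straight line with NumPy's `np.polyfit` and reads off the intercept; the data are synthetic numbers chosen only to exercise the code, not results from these calculations, and `extrapolate_to_zero` is a hypothetical helper name.

```python
import numpy as np

def extrapolate_to_zero(truncation_errors, energies):
    """Least-squares fit E = E0 + slope * w; returns (E0, slope).

    E0 estimates the energy at zero truncation error.
    """
    # np.polyfit returns coefficients from highest degree down: [slope, intercept].
    slope, intercept = np.polyfit(truncation_errors, energies, 1)
    return intercept, slope

# Synthetic, exactly linear toy data (not real DMRG output).
weights = np.array([1e-5, 2e-5, 4e-5, 8e-5])
energies = -0.52 + 30.0 * weights
e0, slope = extrapolate_to_zero(weights, energies)
print(f"extrapolated energy: {e0:.6f}, slope: {slope:.3f}")
```

Real data are only approximately linear in the truncation error, so in practice one would typically fit only the most accurate points and use the spread between fits as a rough error bar.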

After this initial success, we revisited the 2D Hubbard model upon noticing Philippe Corboz's paper on Hubbard stripes. His iPEPS calculations indicated a low-energy stripe state with wavelength 5 in the underdoped region of the phase diagram. Our DMET calculations confirmed this state and, in addition, found stripes with wavelengths 6 to 8 that were even lower in energy. Along the way, we communicated frequently with Philippe, Steve White, Shiwei Zhang, Georg Ehlers and Reinhard Noack, which led to a large collaborative project. In the end, all the different ground-state methods independently simulated stripes of various wavelengths and obtained consistent energy landscapes. For the first time, we were able to determine the ground state at a point of long-standing interest in the 2D Hubbard model with great confidence. (This work has been posted on arXiv [8].)

The success of DMET for the 2D Hubbard model was both surprising and reassuring, but studying real materials will be a bigger and more exciting challenge. As an intermediate step, Ushnish Ray, a postdoc in the group, and I are working on the three-band Hubbard model, and the periodic infrastructure Garnet described in the last post will contribute greatly to the further study of realistic cuprates. We may see the first DMET calculation of cuprates very soon!

References:

[1] D. Zgid, E. Gull, G. K.-L. Chan, Phys. Rev. B 86, 165128 (2012).

[2] G. Knizia, G. K.-L. Chan, Phys. Rev. Lett. 109, 186404 (2012).

[3] Q. Chen, G. H. Booth, S. Sharma, G. Knizia, G. K.-L. Chan, Phys. Rev. B 89, 165134 (2014).

[4] G. H. Booth, G. K.-L. Chan, Phys. Rev. B 91, 155107 (2015).

[5] B. Sandhoefer, G. K.-L. Chan, Phys. Rev. B 94, 085115 (2016).

[6] Q. Sun, G. K.-L. Chan, J. Chem. Theory Comput. 10, 3784 (2014).

[7] B.-X. Zheng, G. K.-L. Chan, Phys. Rev. B 93, 035126 (2016); J. P. F. LeBlanc et al. (Simons Collaboration on the Many Electron Problem), Phys. Rev. X 5, 041041 (2015).

[8] B.-X. Zheng, C.-M. Chung, P. Corboz, G. Ehlers, M.-P. Qin, R. M. Noack, H. Shi, S. R. White, S. Zhang, G. K.-L. Chan, arXiv:1701.00054 (2017).